Group Introduction

Scientific Profile

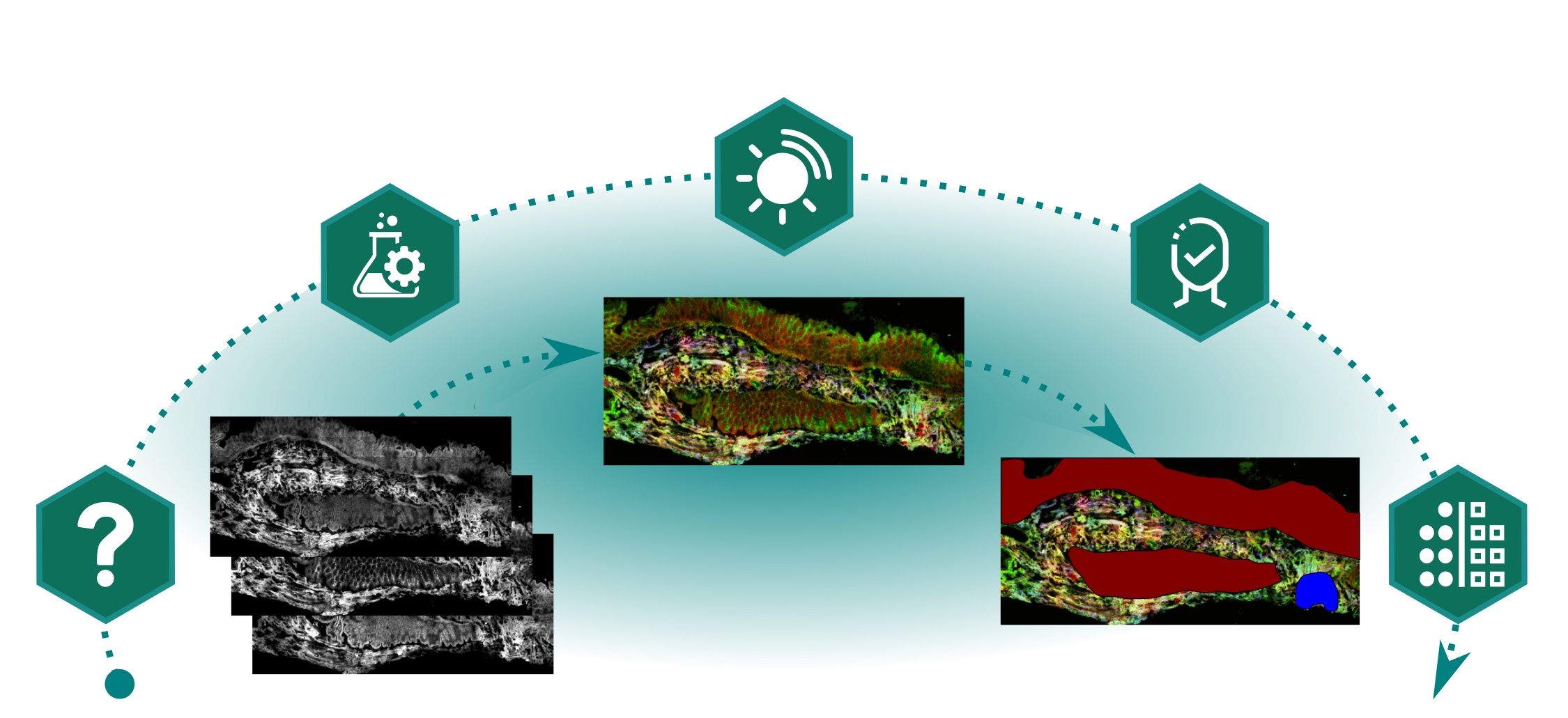

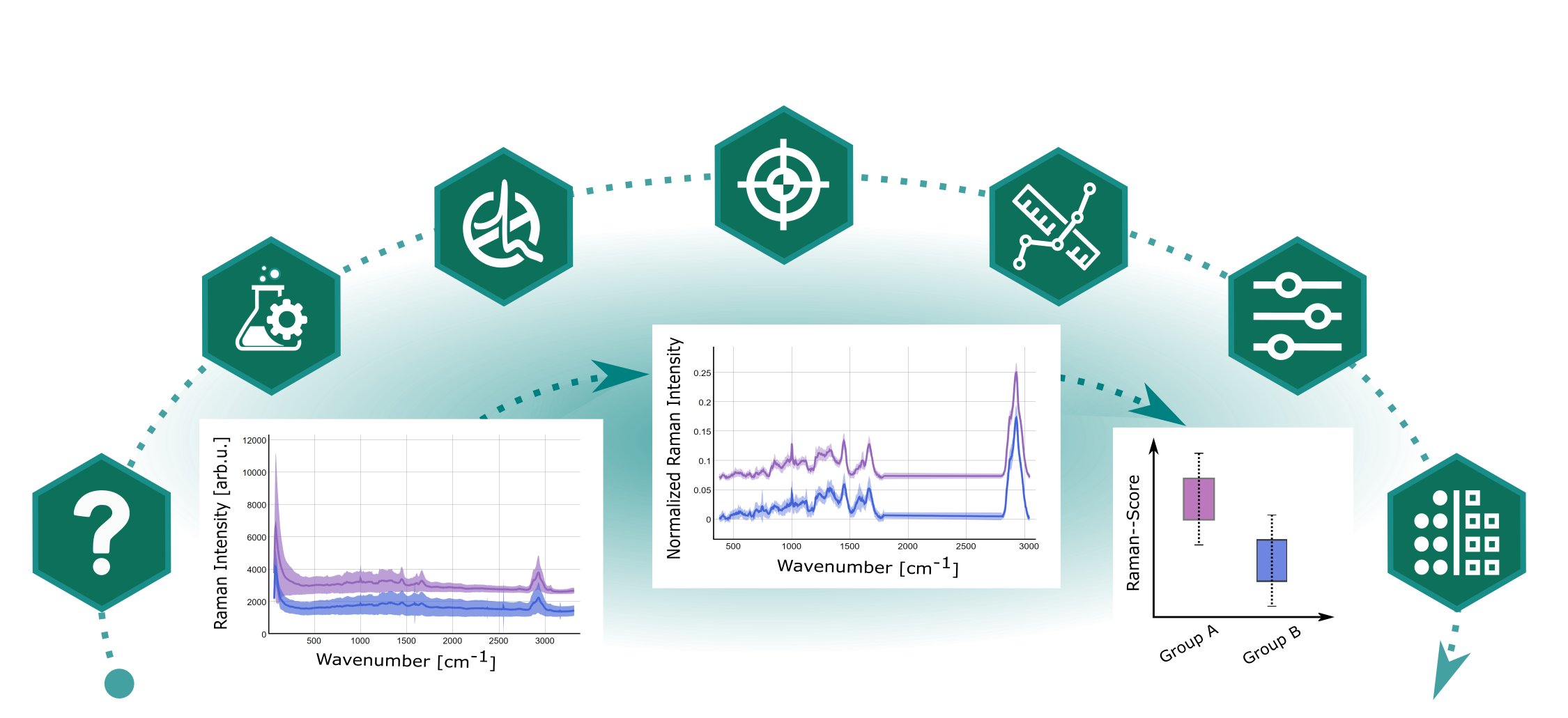

We study the entire life cycle of photonic data, from data generation through data analysis to data archiving. Following a holistic approach, we investigate methods for experimental design and sample size planning as well as for data pretreatment, and we combine these with chemometric, model transfer and artificial intelligence methods in a single data pipeline. In this way, data from various photonic processes can be used for analysis, diagnostics and therapy in medicine, the life sciences, environmental sciences and pharmacy. The data pipelines are implemented as software components and are tested directly in the application environment, e.g. in clinical studies.
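The group's actual pipeline is not specified here. The following is only a minimal sketch, under simple assumptions, of how a pretreatment step can be chained with a chemometric model. It uses standard normal variate (SNV) scaling as the pretreatment and a nearest-class-centroid classifier as a stand-in for the chemometric or AI model. The function names `snv`, `fit_centroids` and `predict` and the toy spectra are hypothetical and chosen for illustration only.

```python
from statistics import mean, stdev

def snv(spectrum):
    """Standard normal variate: center a spectrum on its own mean and scale by its own std.
    Assumes at least two points and a non-constant spectrum (otherwise stdev fails or is 0)."""
    mu = mean(spectrum)
    sd = stdev(spectrum)
    return [(x - mu) / sd for x in spectrum]

def fit_centroids(spectra, labels):
    """Pretreat training spectra with SNV, then average them per class (one centroid per class)."""
    groups = {}
    for spec, lab in zip(spectra, labels):
        groups.setdefault(lab, []).append(snv(spec))
    return {lab: [mean(col) for col in zip(*specs)] for lab, specs in groups.items()}

def predict(centroids, spectrum):
    """Apply the same SNV pretreatment, then pick the class with the nearest centroid
    (squared Euclidean distance)."""
    s = snv(spectrum)
    def dist(c):
        return sum((a - b) ** 2 for a, b in zip(s, c))
    return min(centroids, key=lambda lab: dist(centroids[lab]))

# Hypothetical toy data: within each class the spectra differ only by an intensity factor,
# which SNV removes before the model sees them.
train = [[1.0, 2.0, 3.0, 2.0], [2.0, 4.0, 6.0, 4.0],
         [3.0, 1.0, 1.0, 3.0], [6.0, 2.0, 2.0, 6.0]]
labels = ["A", "A", "B", "B"]
model = fit_centroids(train, labels)
print(predict(model, [10.0, 20.0, 30.0, 20.0]))
```

The key design point is that the same pretreatment is applied at training and prediction time. This keeps the model insensitive to multiplicative intensity differences, such as those caused by varying focus or sample thickness.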

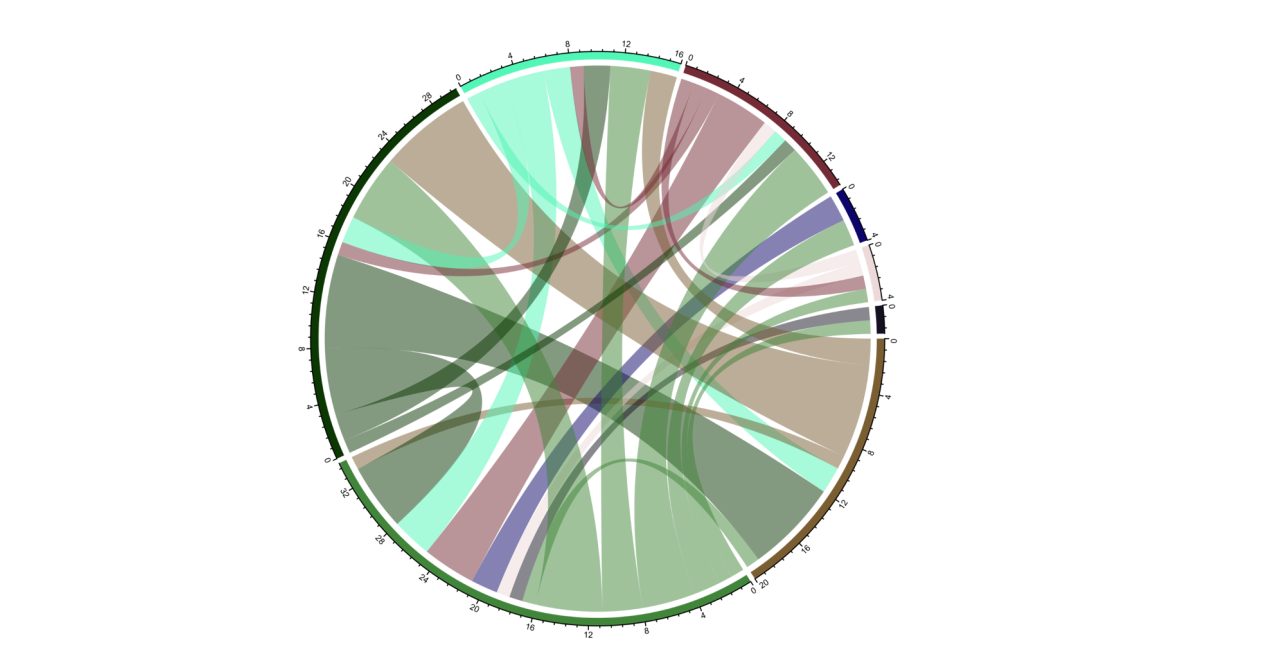

Further focal points are the fusion of heterogeneous data sources, the simulation of different measurement procedures to optimize correction procedures, methods for interpreting analysis models, and the construction of data infrastructures for different types of photonic measurement data that comply with the FAIR principles (Findable, Accessible, Interoperable, Reusable).
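The profile does not say which fusion strategy the group uses. As one common example, "low-level" data fusion concatenates the variables of several measurement techniques recorded on the same samples. The sketch below assumes this strategy. It autoscales each data block and weights it by 1/sqrt(number of variables), so that a block with many wavenumbers does not dominate a block with only a few features. The function names and the two example blocks (labelled "raman" and "fluor") are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev

def autoscale(block):
    """Column-wise z-scoring: every variable gets mean 0 and population std 1 across samples.
    Constant variables are left unscaled (std replaced by 1.0) to avoid division by zero."""
    stats = [(mean(c), pstdev(c) or 1.0) for c in zip(*block)]
    return [[(x - m) / s for x, (m, s) in zip(row, stats)] for row in block]

def fuse_low_level(*blocks):
    """Autoscale each block, weight it by 1/sqrt(n_variables) so that every block
    contributes the same total variance, then concatenate the blocks sample by sample."""
    n = len(blocks[0])
    if any(len(b) != n for b in blocks):
        raise ValueError("all blocks must contain the same samples in the same order")
    weighted = []
    for block in blocks:
        w = 1.0 / sqrt(len(block[0]))
        weighted.append([[w * x for x in row] for row in autoscale(block)])
    # Row i of the fused matrix = row i of every weighted block, joined end to end.
    return [sum((b[i] for b in weighted), []) for i in range(n)]

# Hypothetical example: 3 samples measured with two techniques (4 and 2 variables).
raman = [[1.0, 2.0, 3.0, 4.0], [2.0, 3.0, 5.0, 4.5], [3.0, 5.0, 4.0, 6.0]]
fluor = [[0.1, 10.0], [0.4, 12.0], [0.2, 11.0]]
fused = fuse_low_level(raman, fluor)
print(len(fused), len(fused[0]))
```

After block weighting, both blocks carry the same total sum of squares, regardless of how many variables each technique delivers. Mid-level fusion (concatenating extracted features) or high-level fusion (combining model outputs) are alternatives that this sketch does not cover.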

Research Topics

- Machine learning for photonic image data

- Chemometrics / machine learning for spectral data

- Correlation of different measurement methods and data fusion

Addressed Application Fields

- Biomedical diagnostics using spectral measurement methods and imaging techniques

- Extraction of higher-level information from photonic measurement data

- Simulation- and data-driven correction of photonic data

- Ensuring compliance with the FAIR principles for photonic data